Important update: On March 13, 2024, the European Parliament voted in favor of the EU AI Act. The legislation must undergo a few more steps before it is enacted; however, it is expected to come into force in April or May 2024 and, following a 24-month grace period, to be fully in force by around June 2026. In light of this update, we have changed some parts of this article and added more information to the section Timeline for the AI Act.

On December 9, 2023, a crucial milestone in AI regulation was reached with the provisional agreement on the Artificial Intelligence Act. This development, which followed three days of extensive discussions, is one of the most significant recent advancements in ensuring that AI systems operate safely and align with EU values.

Just a few steps away from becoming law, the AI Act will soon establish the most comprehensive AI regulatory framework globally, encompassing wide-ranging and impactful requirements.

This development is not only crucial for companies operating within the EU but also has far-reaching implications for non-EU companies due to the Act's extraterritorial scope.

Let's delve into the details of the AI Act and explore its implications.

What is the AI Act?

The AI Act is a set of rules designed to make sure that AI technology is used in a responsible and fair manner in the EU market.

Though the regulation is complex, it would be wrong to describe it as an “AI killer”. Its purpose isn't to shield Europe from abstract threats like Artificial General Intelligence (AGI) or dystopian scenarios where AI takes over the world.

Instead, it’s more accurate to think of it as a set of measures that ensure safeguards are in place when algorithms make and automatically execute decisions that could impact someone's life.

To put it briefly, the AI Act aims to introduce:

- due diligence obligations in the development of the AI system from the outset;

- mechanisms to verify the correctness of the decision; and

- avenues to hold individuals accountable if the decision is found to be incorrect.

Who will the AI Act apply to?

The AI Act will apply to a wide range of players in the AI field, and beyond the EU's borders. Let's look at some examples.

Imagine you're a tech innovator in Silicon Valley and you've just developed a cutting-edge AI system. You want to sell it in Europe, but your company doesn't have an office there. No problem, you'll just need to follow the rules set out in the AI Act.

Now, suppose you're an NGO based in Berlin and you're looking to incorporate an AI system to optimize your operations. Regardless of whether that system was developed locally or on the other side of the globe, you'll need to ensure that its use aligns with the stipulations of the AI Act.

Even when an AI system is used outside the EU but its results are used within the EU, the AI Act covers that too. For instance, if a pension fund in Tokyo uses an AI system to analyze data and subsequently shares the insights with a partner in Paris, both will need to ensure they're playing by the AI Act's rules when the Paris partner uses these insights.

The AI Act casts a wide net, aiming to ensure that AI systems are used responsibly and ethically by any organization or government in the EU, regardless of where these systems are developed or deployed. This means that even businesses outside the EU will need to follow the Act's rules if they want to operate in Europe.
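The three scenarios above can be read as a simple "any of these applies" test. The snippet below is a minimal sketch of that reading, not the Act's legal test: the `AIUseCase` class, its field names, and the `in_scope_of_ai_act` function are hypothetical names introduced for illustration.

```python
from dataclasses import dataclass


@dataclass
class AIUseCase:
    """Hypothetical description of how an AI system touches the EU."""
    placed_on_eu_market: bool      # the system is sold or offered in the EU
    used_by_eu_organization: bool  # an organization in the EU uses the system
    output_used_in_eu: bool        # the system's results are used within the EU


def in_scope_of_ai_act(case: AIUseCase) -> bool:
    """Simplified reading of the article: any one link to the EU is enough."""
    return (
        case.placed_on_eu_market
        or case.used_by_eu_organization
        or case.output_used_in_eu
    )


# The three examples from the text, plus a case with no EU link at all.
silicon_valley_vendor = AIUseCase(True, False, False)
berlin_ngo = AIUseCase(False, True, False)
tokyo_pension_fund = AIUseCase(False, False, True)
no_eu_link = AIUseCase(False, False, False)

for name, case in [
    ("Silicon Valley vendor", silicon_valley_vendor),
    ("Berlin NGO", berlin_ngo),
    ("Tokyo pension fund", tokyo_pension_fund),
    ("No EU link", no_eu_link),
]:
    print(f"{name}: in scope = {in_scope_of_ai_act(case)}")
```

Where the system was developed does not appear as an input at all, which mirrors the point that the origin of the system does not change the outcome.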

This has caused a bit of a stir in the tech world. For instance, OpenAI's CEO, Sam Altman, has hinted that the company might have to leave the EU if it can't meet the requirements of the AI Act - a strong reminder of the ripple effect this legislation is having.

Requirements for businesses under the AI Act: a deeper dive

The AI Act should be seen as a wake-up call for businesses, reminding them to make sure their AI systems are safe, clear, and respectful of their users. A quick rundown of what requirements the AI Act entails will help to get a better sense of its impact.

Risk-based approach

The AI Act classifies AI systems into different risk categories: minimal or limited, high, and unacceptable risks. This classification is based on their potential impact on users and society. High-risk AI systems are subject to more stringent requirements, while AI systems posing an unacceptable risk are prohibited. This approach is similar to the GDPR’s risk-based approach to data processing.
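As a rough illustration of the tiered logic, the sketch below maps each risk category named above to its consequence as described in this article. The `RiskTier` enum, the `CONSEQUENCE` table, and `may_enter_market` are hypothetical names, and assigning a real system to a tier requires legal analysis that this sketch does not attempt.

```python
from enum import Enum


class RiskTier(Enum):
    """Risk categories as named in this article."""
    MINIMAL_OR_LIMITED = "minimal or limited"
    HIGH = "high"
    UNACCEPTABLE = "unacceptable"


# Consequence of each tier, paraphrased from the text (not from the Act itself).
CONSEQUENCE = {
    RiskTier.MINIMAL_OR_LIMITED: "less stringent requirements than high-risk systems",
    RiskTier.HIGH: "more stringent requirements",
    RiskTier.UNACCEPTABLE: "prohibited",
}


def may_enter_market(tier: RiskTier) -> bool:
    """Only systems posing an unacceptable risk are banned outright."""
    return tier is not RiskTier.UNACCEPTABLE


for tier in RiskTier:
    print(f"{tier.value}: {CONSEQUENCE[tier]} (allowed: {may_enter_market(tier)})")
```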

Prohibition of certain AI practices

The AI Act puts a hard stop on certain AI practices. In broader terms, the Act prohibits any AI system that tries to manipulate human behavior, exploit vulnerabilities, or support government social scoring. It says no to biometric categorization, predictive policing, and software that scrapes facial images from the internet to create databases, much like what Clearview AI does. It's worth noting that Clearview AI has already faced fines and bans from EU data protection authorities for such practices.

AI systems designed for emotion recognition will also be banned, especially in workplaces, education, and law enforcement. Furthermore, using AI systems to influence people's behavior in ways that could cause harm or exploit their vulnerabilities will be prohibited. This covers practices such as political manipulation and exploitative content recommendations that push harmful or addictive content to vulnerable individuals.

High-risk AI systems

High-risk AI systems are defined under Article 6 and illustrated in Annex III of the AI Act. This category encompasses a very wide range of use cases, such as employment, education, healthcare, biometric identification, and even chatbots if they interact with people in a way that could be perceived as human.

All businesses utilizing high-risk AI systems will have to meet specific requirements regarding transparency, data quality, documentation, human oversight, and robustness. They will also have to undergo "conformity assessments" to demonstrate that they fulfill the requirements of the AI Act before entering the market. This is akin to the GDPR's data protection impact assessment (DPIA) requirement for high-risk data processing.

Foundation models and general-purpose AI

AI foundation models, such as GPT-4, are large-scale models that form the technical basis for tasks such as generating coherent text. General-purpose AI systems, on the other hand, are AI systems that can be used in and adapted to a wide range of applications for which they were not intentionally and specifically designed.

Under the AI Act, providers of these AI foundation models and general-purpose AI systems will be subject to extensive documentation, transparency, and registration obligations. As such, even before a foundation model is launched, providers will need to show through design, testing, and analysis that they've mapped out and mitigated any foreseeable social and security risks. Furthermore, they will be required to register their foundation models in a newly established EU database.

Additionally, these providers will need to use only data sets that have been subject to appropriate data governance measures, which would require a thorough examination of the data sources.

On top of this, providers of foundation models used in “generative AI” - AI systems designed to autonomously generate complex content like text or video - will face additional obligations. At a basic level, they will need to ensure that their models don't generate content that breaks EU law, and publish a summary of how they use copyright-protected training material.

As for organizations that use these AI models in their operations, the AI Act does not directly impose obligations on them. However, they might be indirectly affected by the obligations imposed on the AI providers. For example, if an organization uses an AI model that has not been properly registered or does not meet the required standards, it could face legal and operational risks.

Supervision and oversight

The AI Act sets up the European Artificial Intelligence Board (EAIB). The EAIB's job will be to offer guidance, share effective strategies, and make sure the AI Act is applied consistently across all EU member states. This board is modeled after the European Data Protection Board (EDPB), which was created by the GDPR.

The European Commission also plans to establish the European AI Office within its structure. The AI Office will focus on supervising general-purpose AI models, collaborating with the EAIB, and consulting with a panel of independent, scientific experts for support and guidance.

Additionally, each EU member state will have to designate authorities responsible for monitoring and enforcing compliance with the AI Act, similar to the competent authorities required by the GDPR. This requirement ensures that there is a clear point of contact for businesses and provides a mechanism for enforcing the AI Act's requirements.

Fines and penalties

Non-compliance with the AI Act can lead to hefty fines. Depending on the severity of the infringement, fines can go up to 7% of the global annual turnover of the legal entity responsible for the AI system. This is a significant increase from the GDPR’s fines that go up to 4% of the global annual turnover.
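To put the two percentages side by side, here is a small worked calculation. The turnover figure is a made-up example, the function name `max_percentage_fine` is hypothetical, and the sketch only covers the percentage-based ceilings mentioned above; it ignores any fixed-amount limits and the lower tiers that apply to less severe infringements.

```python
# Maximum percentage-based fines quoted in this article.
AI_ACT_MAX_PERCENT = 7
GDPR_MAX_PERCENT = 4


def max_percentage_fine(global_annual_turnover_eur: int, percent: int) -> int:
    """Upper bound of a turnover-based fine, in whole euros."""
    return global_annual_turnover_eur * percent // 100


# Hypothetical company with EUR 500 million in global annual turnover.
turnover = 500_000_000

ai_act_cap = max_percentage_fine(turnover, AI_ACT_MAX_PERCENT)
gdpr_cap = max_percentage_fine(turnover, GDPR_MAX_PERCENT)

print(f"AI Act ceiling: EUR {ai_act_cap:,}")  # EUR 35,000,000
print(f"GDPR ceiling:   EUR {gdpr_cap:,}")    # EUR 20,000,000
```

For this example company, the AI Act ceiling is EUR 15 million higher than the GDPR ceiling.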

Key concerns around the AI Act

As our breakdown of the requirements shows, the AI Act asks a lot from businesses. Beyond the challenge of meeting these burdensome requirements, further problems may arise around how to correctly interpret and implement some of its provisions.

For example, the Act requires AI providers to ensure “high-quality data for the training, validation, and testing of AI systems”. On the surface, this seems like a reasonable ask. However, the catch is that the Act doesn't clearly define what constitutes “high-quality data”. This lack of clarity and of specific standards could create loopholes for businesses.

Adding to the challenges, the enforcement aspect of the AI Act can also become a problem. With its enforcement structure closely resembling that of the GDPR, one could expect, at least at first, a repeat of past issues such as inconsistent application and resource constraints. If we look back at the early days of the GDPR, which came into effect in 2018, we see that fines and enforcement actions took considerable time to gain momentum.

We will probably see a similar story with the AI Act. It's a big ask for EU member states, both in terms of budget and administration, and some countries might find themselves on the back foot. Furthermore, enforcing the AI Act will demand a deep understanding of AI development from the regulatory bodies. The task of locating and recruiting specialists to conduct audits could pose an even greater challenge for effective enforcement.

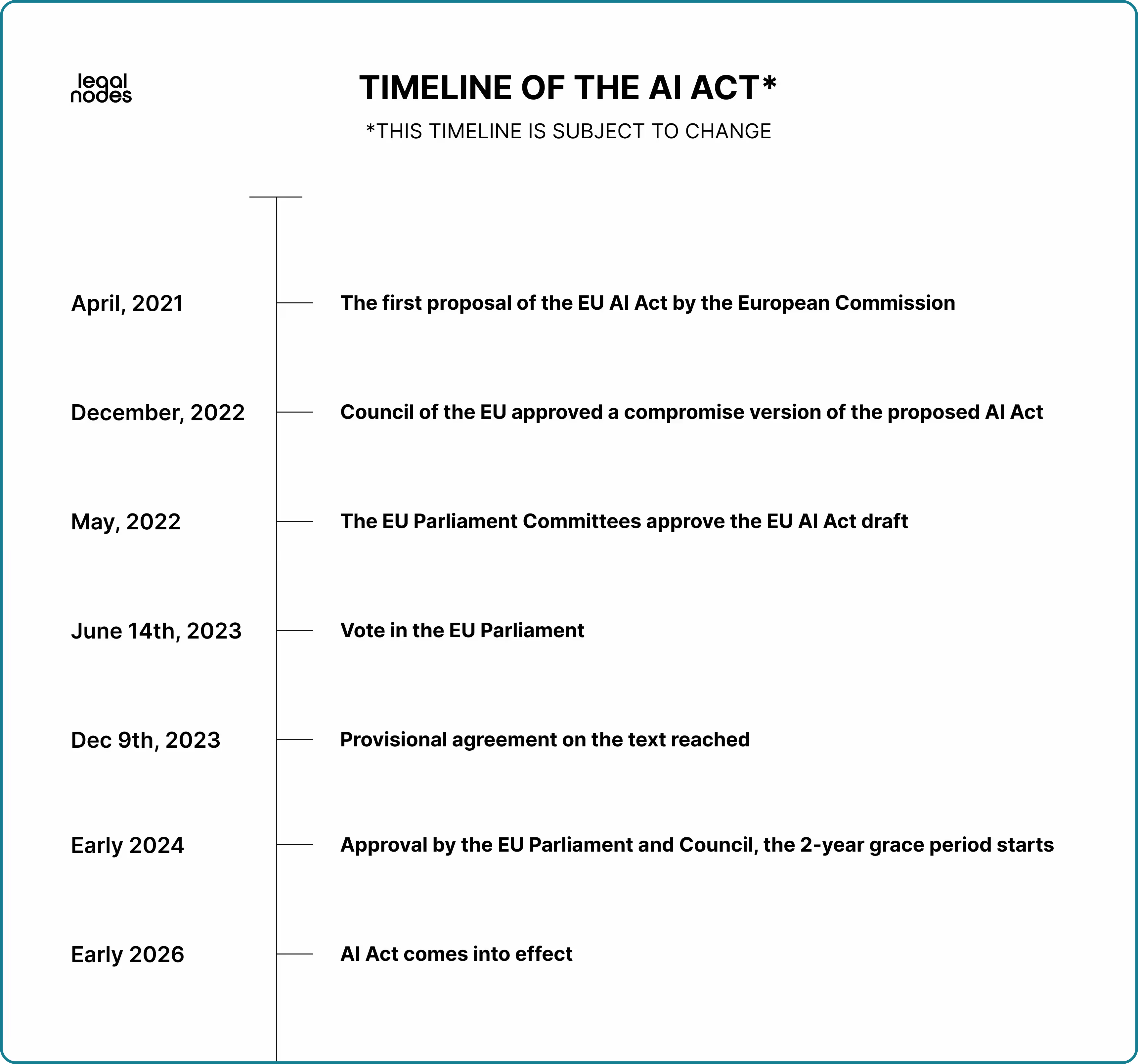

Timeline for the AI Act

Following the European Parliament's vote in favor of the EU AI Act on March 13, 2024, we are now able to provide an updated timeline of anticipated events.

After the political agreement was reached to introduce legislation governing AI, two steps were required for the AI Act to become law: finalizing technical details of the text and securing approval from the European Parliament and Council. These events were expected to happen in the first part of 2024, and as the European Parliament has voted in favor of the Act, here's what will happen next:

- In the next few weeks, the Act will undergo a final lawyer-linguist check.

- The Council of the European Union will provide formal endorsement for the new laws.

- Sometime in April or May, the EU AI Act will be published in the Official Journal of the EU.

- 20 days after the date of publication, the Act will come into effect.

Once these new laws are first enacted, a two-year grace period will commence. This will give businesses some breathing room to make changes to comply with the AI Act. During this grace period, certain aspects of the new rules will also be introduced in phases, for example:

- Six months after coming into force, bans will be applied on prohibited practices.

- Nine months after coming into force, new codes of practice will be introduced.

- 12 months after coming into force, new general-purpose AI rules including governance will be introduced.

As the EU AI Act is set to come into force some time in May or June 2024, most businesses now have a two-year period to become fully compliant. Some businesses may have a much shorter deadline (e.g., six months), for example in cases of prohibited AI systems. Other businesses using AI that is already regulated under EU law may have longer periods (e.g., 36 months) to achieve compliance. AI systems that fall under the definition of a regulated product, or that are used as a safety component in a regulated product, may qualify for longer compliance periods; however, businesses should carefully check the rules to ensure they achieve compliance by the required deadline.
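The phased deadlines described in this section all follow from a single date: publication in the Official Journal. The sketch below shows the arithmetic, assuming a purely hypothetical publication date of May 15, 2024 (the actual date was not known at the time of writing). The `add_months` helper and the milestone labels are illustrative names, and the month offsets are the ones quoted above.

```python
import calendar
from datetime import date, timedelta


def add_months(start: date, months: int) -> date:
    """Shift a date forward by whole months, clamping to the month's last day."""
    index = start.month - 1 + months
    year = start.year + index // 12
    month = index % 12 + 1
    day = min(start.day, calendar.monthrange(year, month)[1])
    return date(year, month, day)


# ASSUMPTION: hypothetical publication date, for illustration only.
publication = date(2024, 5, 15)

# The Act comes into effect 20 days after publication.
entry_into_force = publication + timedelta(days=20)

# Months after entry into force, as quoted in this section.
MILESTONES = {
    "bans on prohibited practices": 6,
    "codes of practice": 9,
    "general-purpose AI rules": 12,
    "general compliance deadline": 24,
    "extended deadline (some already-regulated AI)": 36,
}

timeline = {
    name: add_months(entry_into_force, months)
    for name, months in MILESTONES.items()
}

print(f"entry into force: {entry_into_force.isoformat()}")
for name, deadline in timeline.items():
    print(f"{name}: {deadline.isoformat()}")
```

With this assumed publication date, the Act would enter into force on June 4, 2024, and the general compliance deadline would fall on June 4, 2026. Changing `publication` shifts every milestone accordingly.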

Please note: the timeline below states that the EU AI Act will come into force in "Early 2026". Recent changes now indicate that the Act will be fully in force between April and June of 2026.

Preparing for the AI Act

The European Parliament's majority vote in favor of the EU AI Act on March 13, 2024 cements the fact that this legislation is just around the corner. Businesses should act now to prepare for compliance requirements that this new regulation may impose.

The AI Act might feel like a steep hill to climb, but there's no time like the present to start the ascent, especially with the global AI regulation landscape shifting at a rapid pace. Moreover, as the drive towards more ethical AI gains momentum, an increasing number of people worldwide are becoming aware of how automated algorithms can affect their lives.

We're on the cusp of a new era - an era of trustworthy and responsible AI. A clear sign of this is the recent pledge by the G7 nations to develop global AI standards. This commitment signals a future in which AI systems are expected to be ethical, reliable, robust, transparent, and accountable, and to be designed and used in a way that respects the rule of law, human rights, democratic values, and diversity.

In addition to the EU's efforts, the Council of Europe is also actively working on the AI Convention. The convention, which is still under development, aims to establish binding common principles and rules for AI. While it's meant to go hand in hand with the EU's AI Act, its reach will be broader, extending to all 46 members of the Council of Europe, including the UK.

So, while the AI Act might seem like a tough challenge, it makes more sense to view it as part of a larger and inevitable global movement towards trustworthy and responsible AI. And with a two-year grace period providing a bit of a cushion, now may be the ideal time for businesses to gear up for this new chapter in AI governance.

Disclaimer: the information in this article is provided for informational purposes only. You should not construe any such information as legal, tax, investment, trading, financial, or other advice.

Kostiantyn holds a certification as an Information Privacy Professional in Europe (CIPP/E). Fuelled by his passion for law and technology, he is committed to the protection of fundamental human rights, including data protection. He adds a musical touch to his repertoire as an enthusiastic jazz pianist in his spare time.