It’s 2023, and once again, we are actively discussing how AI is replacing lawyers. In 2016, the legal industry was stirred by the first mass-media headlines about AI replacing lawyers. It was the year when chatbots started to appear, interacting with users and performing simple tasks in a "robotic" manner. In the legal industry, chatbots were launched to answer basic legal questions, assist with the preparation of simple documents, and handle other routine tasks.

Looking back, it’s easy to see that, at that time, the innovation only changed the interface through which users interacted with algorithms. Behind the scenes, most of these chatbots still relied on linear, scripted algorithms. By the end of 2017, the hype around legal chatbots had subsided, and lawyers returned to writing emails and manually preparing documents in MS Word.

However, at the beginning of 2023, headlines about AI replacing lawyers resurfaced. This time, it's not just chatbots, but Large Language Models (LLMs). Is this just another hype cycle that will soon fade, or a more serious challenge for the legal profession?

We’ll explore this question in this article.

What is an LLM, and how does it differ from previous AI developments in the legal field?

An LLM (Large Language Model) is an algorithm trained on vast amounts of text data that can recognize and generate text in various ways. Today, LLMs are being developed by big tech companies (Google, Amazon, Meta) as well as by open-source projects (such as Databricks' Dolly). One of the most popular and powerful LLMs is GPT, developed by OpenAI, with ChatGPT as the interface for user interaction.

The distinctive feature of an LLM compared to other AI technologies is that it works specifically with text as both input and output. This is why it's called a large language model. If you have already had the opportunity to test ChatGPT, you may have noticed how GPT can take text inputs, process them through the many layers of a neural network trained on large datasets, and produce high-quality and often accurate outputs (such as answers to complex questions, draft emails, articles, presentations, and more).

This is the main difference between LLMs and earlier AI developments: LLMs can accept "non-technical" commands through a conversational interface (like ChatGPT), analyze them, and accurately execute them (generate text output).


The revolutionary aspect of LLMs

As mentioned earlier, the revolutionary aspect of an LLM is its ability to accept "non-technical" commands and to improve the results of executing them in a conversational (non-technical) mode. This breakthrough can be compared to the personal computer revolution of the late 1980s, when the command-line interface (used only by tech geeks familiar with command libraries) was replaced by the graphical user interface of folders and files that we are accustomed to on our laptop screens today. At that moment, computers transformed from devices for tech geeks into mass-market products for users without technical backgrounds, who started using them both at work and in everyday life.

Since the beginning of 2023, we have been witnessing a similar revolution. AI technology (specifically ChatGPT and similar tools) has become accessible to a wide range of non-technical users, thanks to LLMs' ability to accept tasks (commands) in a conversational format, provide output data (generated text), and improve these results based on non-technical users' comments and edits.


This has made the AI user experience similar to working with a personal assistant (PA). Just like a PA, the AI assistant can receive task instructions, such as drafting an email, prepare initial results, and iterate on them based on comments and edits provided by a manager. The manager doesn’t need to give this feedback using complex technical commands, or even have basic programming knowledge. Instead, a manager can just type a command into ChatGPT that starts with "act as my personal assistant and prepare a draft email for me...".
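
To make this "personal assistant" loop more concrete, here is a minimal Python sketch of how such a conversation can be represented as a list of messages. It assumes the role/content message format used by chat-style LLM APIs such as OpenAI's Chat Completions API; the helper names `start_conversation` and `add_feedback` are hypothetical, no model is actually called, and the draft text is a placeholder standing in for what the model would return.

```python
from typing import TypedDict


class Message(TypedDict):
    role: str     # "system", "user" or "assistant"
    content: str  # plain-language text, no programming required


def start_conversation(task: str) -> list[Message]:
    """Brief the assistant in plain language, as a manager would brief a PA."""
    return [
        {"role": "system", "content": "Act as my personal assistant."},
        {"role": "user", "content": task},
    ]


def add_feedback(history: list[Message], draft: str, feedback: str) -> list[Message]:
    """Append the assistant's draft and the manager's edits for the next iteration."""
    return history + [
        {"role": "assistant", "content": draft},
        {"role": "user", "content": feedback},
    ]


# Hypothetical exchange: the draft below stands in for real model output.
history = start_conversation("Prepare a draft email to a client confirming our call on Tuesday.")
history = add_feedback(
    history,
    "Dear client, I am writing to confirm our call on Tuesday.",
    "Make it shorter and mention the agenda.",
)

for message in history:
    print(message["role"], "->", message["content"])
```

In chat-style APIs of this kind, the client typically resends the whole history on each turn, which is what lets the model take earlier drafts and comments into account while the feedback itself stays purely conversational.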

How can LLMs impact knowledge workers (including lawyers)

Now that we have briefly discussed what an LLM is and how it works, let's analyze what knowledge workers, particularly lawyers, can expect from the development of this technology in the near future. To understand the potential of LLMs in this field, let's compare the similarities and differences between LLMs and lawyers when they work with texts that require specialized knowledge, like legal texts.

Let's start with lawyers. When working on client tasks, a lawyer goes through three stages of mental activity:

- They refer to their own experience/memory/other sources (e.g., the internet) to acquire legal knowledge (laws, regulatory explanations, case precedents, procedural stages, practical experience).

- They activate skills to apply legal knowledge to solve client tasks (the ability to analyze the client's situation, identify client needs/tasks, and find/apply relevant legal rules to the client's case). Lawyers acquire these skills in law school and refine them over years of legal practice.

- They activate the ability to formulate (express) the results of solving the client's task in written form (prepare a document containing a legal opinion, a drafted contract, recorded consultation, etc.).

Can LLMs handle tasks as well as lawyers do?

Analyzing the work of LLMs allows us to conclude that the stages of mental activity required to solve a client's task are quite similar for both LLMs and lawyers, namely:

- Receiving and analyzing the task (for AI, these are called "prompts").

- Referring to a knowledge base to find answers (datasets on which LLMs have been trained, the internet, AI's contextual memory).

- Activating "skills" to analyze/compare/process information and generate text output that addresses the task (in an LLM, these "skills" come from a multi-layered neural network whose parameters have been trained and fine-tuned on large datasets to achieve high accuracy).

- Providing textual output (thanks to being a language model, AI can generate human-readable results like texts, presentations, tables, etc.) and iterating on them based on user feedback and comments.

Does this mean that AI can soon replace lawyers? Let's explore further.

Do LLMs pose threats to the legal profession today, and if so, what are they?

It seems that LLMs already replicate many aspects of a lawyer's work quite successfully. Consider the speed at which existing LLMs are "learning" and the pace at which more advanced versions are being developed: only about 33 months passed between the launches of GPT-3 and GPT-4. GPT-3 has 175 billion parameters; OpenAI has not disclosed GPT-4's parameter count (the widely circulated figure of 100 trillion is an unconfirmed rumor), but according to OpenAI's own technical report, GPT-4 scored around the top 10% of test takers on a simulated bar exam, while GPT-3.5 scored around the bottom 10%. This raises the question: what risks does this rapid development of AI and LLMs pose to the legal profession? Can this technology really replace lawyers in the near future?

In our opinion, AI definitely cannot replace lawyers in the near future. Currently, the main reason is that even the most advanced LLMs struggle with abstract concepts. They perform poorly at mathematical calculations and lack a real grasp of formal logic. As a result, when faced with tasks that require "thinking outside the box," they offer solutions based solely on previous "experience" from the datasets they were trained on (which typically consist of digitized books, Wikipedia, and internet data from the past 30 years).

This limitation leads to frequent "hallucinations" (plausible-sounding but false or fabricated statements) in AI responses to complex tasks. And constructing complex legal cases is virtually impossible without the abstract concepts and logical reasoning described above.

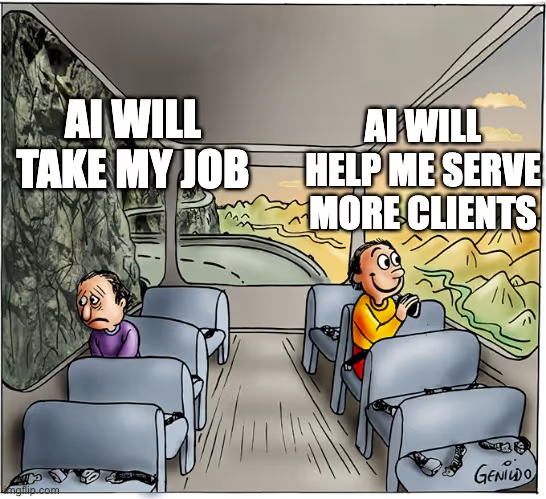

Lawyers who ignore the development and use of AI technologies may lose clients

However, in our opinion, lawyers shouldn’t breathe a sigh of relief just yet. It is well known in the legal profession that constructing and resolving complex legal cases takes up only part of a lawyer's workday. The rest of the time is occupied by client communication, email writing, legal team management, billing, and other tasks. And, as noted above, AI can already handle many of these administrative and operational tasks much faster and more efficiently than lawyers.

This creates a potential risk of losing clients (and losing competitiveness in the market as a whole) for lawyers who refuse to use AI tools, even just to automate administrative tasks in their own legal practice.

So, let’s look at five ways lawyers could lose clients if they choose not to use AI tools in their daily work.

1. Clients will not work with lawyers who bill for tasks that AI could handle

A ten-minute phone call, sending an email, providing a template for a document: these are a few examples from lawyers' billing reports that often raise questions from clients, such as “Why should I pay for this when AI can do it for free?” and “Why should I pay lawyers’ rates when this work could be automated at a fraction of the cost?”

From the lawyer's perspective, this is understandably time spent on client work that may be “non-legal” but should still be compensated, since it was performed at the client's request. However, will this argument sound as convincing to a client who receives a monthly report with billed hours for tasks that AI could have completed within minutes? Ultimately, the answer lies in the clients' judgment.

2. Client expectations regarding communication speed and the efficiency of legal services will only grow from now on

This will push out lawyers who do not use AI, as they will not be able to compete with AI-enabled colleagues on the speed and efficiency of the legal support they provide to clients.

With the development of digital technologies, the pace of business processes has increased significantly. We have come to expect a response from online customer support within 10-15 minutes, or product delivery within one working day. However, when it comes to communicating with lawyers, answers often take up to a week to arrive.

This gap in client service between the legal field and other (IT-related) industries is felt most keenly by founders of technology companies, who have experience building their own support teams and know how to optimize the speed and quality of responses using AI. Such founders will find it puzzling that a lawyer takes up to three working days after a client call to prepare and send a response that could be generated and sent in just 5 minutes using AI.

3. Lawyers who do not utilize AI for automating non-billable tasks will not achieve the same commercial efficiency as their AI-enabled colleagues

As a result, they will be unable to compete with their peers in terms of price for their services.

In a lawyer's standard 8-hour workday, half of that time is typically spent on non-billable (administrative) tasks that do not increase the revenue of the legal practice. This, in turn, affects the cost of each hour of the lawyer's work: the practice's costs have to be recovered through billable hours alone, so the more non-billable work there is, the higher the cost of an hour of work, and the higher the price of services for clients.

Therefore, the lawyers who are first to learn how to reduce their non-billable hours using AI will be able to lower the cost of their teams' working hours, which in turn will enable them to offer clients more competitive prices for their services.
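
The arithmetic behind this argument can be sketched in a few lines: cost per billable hour = daily cost ÷ (workday hours − non-billable hours). The figures below (a daily cost of 800 in any currency, and the assumption that AI halves the administrative time) are purely hypothetical and serve only to show the mechanism, not to report real data.

```python
def cost_per_billable_hour(daily_cost: float, workday_hours: float,
                           non_billable_hours: float) -> float:
    """Spread a lawyer's full daily cost over the hours that can actually be billed."""
    billable_hours = workday_hours - non_billable_hours
    if billable_hours <= 0:
        raise ValueError("no billable hours left in the workday")
    return daily_cost / billable_hours


# Hypothetical figures: 800 of salary and overhead per 8-hour day.
without_ai = cost_per_billable_hour(800, 8, 4)  # half the day is non-billable
with_ai = cost_per_billable_hour(800, 8, 2)     # assumes AI halves the admin time

print(f"{without_ai:.2f} vs {with_ai:.2f}")  # 200.00 vs 133.33
```

Under these assumptions, cutting non-billable time from four hours to two lowers the cost of each billable hour by a third, which is the room for more competitive pricing described above.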

4. In legal sales, the customer acquisition cost (CAC) for lawyers who don’t use AI will be significantly higher compared to AI-enabled colleagues

As a result, these lawyers, with the same budgets as their AI-enabled colleagues, will sell fewer services, and each client will cost them more to acquire.

Legal sales rarely close without several introductory and sales calls between the lawyer and the potential client. The same is true in practically every field of knowledge work: before a client is willing to engage a consultant's services, they want to be sure of the consultant's expertise.

For lawyers, this usually involves non-billable work that is part of the customer acquisition cost (CAC). The more sales-related work, the more non-billable hours, and the more expensive it is for a lawyer to acquire a client. Those lawyers who are the first to master AI sales agents, allowing the client to initially interact with an "AI clone" of the lawyer before making the final intro call and discussing collaboration, will be able to significantly reduce their CAC. They can also increase the sales capacity of their practice (attracting more clients without increasing the team and resources).
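
A rough way to see the effect on CAC is the usual definition: total acquisition cost divided by the number of clients won. The sketch below treats lawyer time spent on sales calls as the main acquisition cost; every figure (20 leads, a 25% conversion rate, 200 per lawyer hour, three hours of calls per lead versus one hour when an AI agent handles first contact, and a 500 tool cost) is a hypothetical assumption, and it also assumes the conversion rate stays the same with AI in the loop.

```python
def customer_acquisition_cost(lawyer_hours_per_lead: float, hourly_cost: float,
                              leads: int, conversion_rate: float,
                              tool_cost: float = 0.0) -> float:
    """CAC = total cost of (non-billable) sales work / number of clients won."""
    if not 0 < conversion_rate <= 1:
        raise ValueError("conversion_rate must be in (0, 1]")
    clients_won = leads * conversion_rate
    total_sales_cost = lawyer_hours_per_lead * hourly_cost * leads + tool_cost
    return total_sales_cost / clients_won


# Hypothetical figures: 20 leads, 1 in 4 becomes a client, lawyer time costs 200/hour.
manual = customer_acquisition_cost(3, 200, 20, 0.25)  # 3 hours of calls per lead
ai_assisted = customer_acquisition_cost(1, 200, 20, 0.25, tool_cost=500)  # AI handles first contact

print(f"{manual:.2f} vs {ai_assisted:.2f}")  # 2400.00 vs 900.00
```

The same hours freed per lead also translate into sales capacity: under these assumptions, a lawyer could take on roughly three times as many leads with the same time budget.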

5. Lawyers who do not use AI will not be able to scale their legal practices as quickly as their AI-enabled colleagues

As a result, they will be unable to effectively compete for capturing new service niches.

"AI clones" for lawyers could apply not only to sales-related work but also to the actual process of legal support. Considering the pace of development and improvement in AI technologies, there is a high likelihood that AI co-pilots for lawyers will significantly accelerate the delivery of legal services and give lawyers "superpowers" that let them quickly scale their legal practices and capture new market niches without growing their legal teams, whose capacity is always bound by the 8-hour workday.

What work can lawyers already delegate to AI to avoid losing clients in the future?

Currently, the aforementioned reasons and risks of AI for the legal profession are more hypothetical forecasts/predictions than statistically supported facts. However, among founders of technology companies, there is already an unofficial recommendation: "If your lawyer does not utilize AI in their work with you, it means they will overbill you for tasks that AI can handle much more accurately today."

Legal Nodes knows this first-hand because we have been working with founders for the last 5 years, helping them solve multi-jurisdictional legal issues. During this time, we have collected a lot of analytics and data that clearly illustrate how demanding these founders are when it comes to the efficiency of legal work and the speed and quality of legal support overall.

How Legal Nodes helps lawyers prepare for the AI transformation of the legal profession

Legal Nodes is a two-sided legal platform that helps founders of international companies solve legal issues with help from the most accurately matched local legal providers. We’ve been receiving requests from founders who wish to be matched with AI-savvy lawyers. We’re taking these requests as an important signal that the times are a-changing and we’re launching a new initiative to assist service providers from the Legal Nodes Network in researching and implementing AI technologies in their own practices.

At Legal Nodes, we believe that ultimately this will lead to win-win results for both sides of our legal platform, where:

- Founders will receive more cost-effective legal services with the help of AI (avoiding overbilling) and enjoy better client service (due to a significant increase in communication speed and responsiveness).

- AI-savvy lawyers will gain a competitive advantage in attracting clients compared to colleagues who do not use AI. They will also become more commercially efficient (reducing non-billable hours, increasing their capabilities, and improving the profitability of their own practice).

So, how can we achieve this win-win result? We’ve got the answer:

6 ways lawyers can start implementing AI into their own practices

- Familiarize themselves with the use cases of AI in a lawyer's work: legal prompt engineering, development of legal co-pilots, creation of legal AI agents.

- Prepare and structure an internal knowledge base accumulated through legal practice (proprietary data) to be used for training and working with AI tools.

- Start testing AI tools on practical use cases (automated generation of follow-ups, auto-filling template documents, etc.), and begin building a culture of AI usage within the company through training and incentive/motivation programs.

- Consider hiring prompt engineers (or developing this expertise internally) in order to create and test the most effective prompts for your unique workflows and maximize the benefits of using LLM-based tools.

- Establish the position of an AI Ethics Officer in the company, who will not only implement new AI tools in the company's work but also ensure compliance during their use (addressing AI ethics, working with personal data/confidentiality, protecting proprietary data, and other intellectual property of the company).

- Develop a long-term strategy for the use/integration of AI in legal practice, which will involve strengthening the legal team with technical specialists (data science, machine learning) to enable the development of more complex and customized AI solutions (AI co-pilots, AI legal agents, legal AGI, etc.).
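
As an illustration of the "auto-filling template documents" use case mentioned above, the sketch below fills a document template from a set of field values using Python's standard `string.Template`. It is a minimal sketch under stated assumptions: the NDA wording and field names are hypothetical, and in an AI-assisted workflow an LLM might extract the field values from, say, a client email. That extraction step is not shown here; the values are simply hard-coded.

```python
from string import Template

# Hypothetical NDA fragment; the field names are illustrative placeholders.
NDA_TEMPLATE = Template(
    "This Non-Disclosure Agreement is entered into on $date "
    "between $disclosing_party and $receiving_party."
)


def fill_template(template: Template, fields: dict[str, str]) -> str:
    """Fill every placeholder; raise if a field is missing instead of leaving a gap."""
    try:
        return template.substitute(fields)
    except KeyError as missing:
        raise ValueError(f"missing field: {missing}") from None


# Hypothetical values, e.g. as they might be extracted from a client email.
document = fill_template(NDA_TEMPLATE, {
    "date": "1 June 2023",
    "disclosing_party": "Acme Ltd",
    "receiving_party": "Beta LLC",
})
print(document)
```

Using `substitute` rather than `safe_substitute` is a deliberate choice: a missing field raises an error instead of silently leaving a `$placeholder` in a document that may end up in front of a client.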

Disclaimer: the views expressed in this article are those of the author and do not necessarily express the views of Legal Nodes LTD.

The information in this article is provided for informational purposes only. You should not construe any such information as legal, tax, investment, trading, financial, or other advice.

Nestor is a Co-founder & Head of Web3 Legal at Legal Nodes. With over eight years of legal consulting experience, Nestor loves working with innovative startups and Web3 projects, helping them navigate regulations and scale in global markets.